AIOPs as the category that is the future state of the infrastructure monitoring, APM, and log analysis business is dead. AIOPs is dead for the following reasons.

AIOPs has no Champion that Matters

AIOPs as a term and an idea was invented by Gartner. The analyst who invented the term then left Gartner. At that point there was no one at Gartner who assumed the role of championing AIOPs as the future state of the industry. The key vendors in this space (Datadog, Dynatrace, Elastic, IBM (Instana), New Relic, and Splunk) all treated AIOPs as a feature of their products that they already had, not as some future state to aspire to.

Customers became Underwhelmed with AI in IT Operations

The fundamental goal of AI in IT Operations (AIOPs) was to help customers better identify problems and solve them. But it turned out that the vast majority of the AIOPs solutions tried and implemented by customers still had an age old problem. That problem was that the AIOPs products could not deliver an acceptable ratio of “good alarms” to “bad alarms”. Good alarms are alarms about real problems which need to be addressed. Bad alarms are false positives (an alarm about something that really is not a problem) or a false negative (a real problem that was missed by the system and that should have been an alarm).

AIOPs became Synonymous with “Event Management”

Two of the vendors that were most aggressive in adopting the term AIOPS were Moogsoft and Big Panda. Both were event management offerings. The idea was to feed all of your events from all of your systems and monitoring products to these products and to have them sort out which ones to pay attention and how to address them. The problem was (and is) that the events did not, and still do not contain the information needed to address the event. So this definition of AIOPs failed to take over the world.

AIOPS became Synonymous with “Tying Everything Together” which is Impractical

The idea of tying together all of the data (metrics, traces, logs, events, topology, dependencies, configuration changes, updates to applications) produced by all of the various monitoring tools in use in the enterprise (and the cloud) was really compelling. It fell afoul of the following realities:

- Different tools collected different parts of the required data at different time intervals about different parts of the systems and applications

- Different tools exposed different subsets of what they collected in their API’s

- There was no general way to connect the data from N tools into a cohesive and relevant whole. For example you cannot connect transactions monitored by an APM tool to SNMP data monitored by a network tool because the APM data does not contain the ID of the switch or router through which the transaction flowed, and the SNMP data contains no information about applications or transactions (the same problem applies to Netflow).

- Enterprises had lots of different tools so integrating with them all became a nightmare for the vendors who tried

So the bottom line is that if an enterprise had a mess in terms of a toolset that could not be easily integrated into a cohesive whole, AIOPs could not rescue the enterprise from that mess.

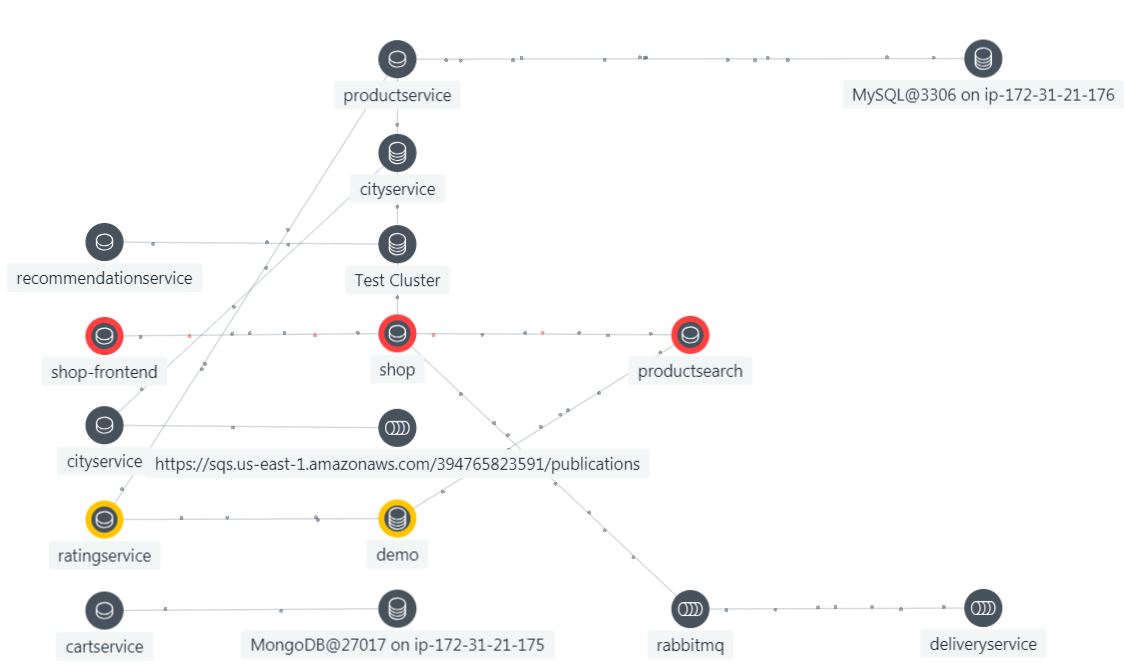

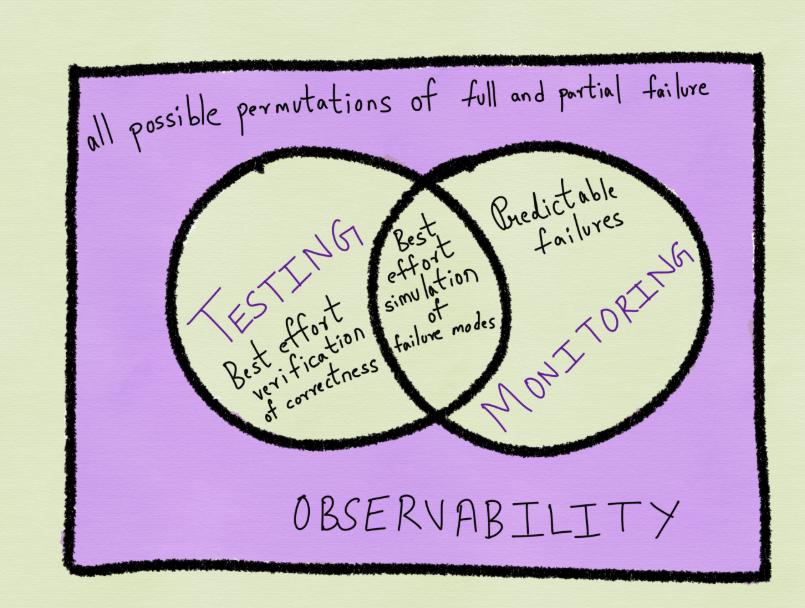

Observability Platforms Became the Current and Future State of the Industry

The key vendors in this space (Datadog, Dynatrace, Elastic, IBM (Instana), New Relic, and Splunk) all adopted Observability as the term to describe their products and their value. The key difference in modern Observability Platforms is that all information (metrics, logs, traces, etc.) is collected in context so the bits of data are related to each other at ingest time. This greatly cuts down on false alarms and greatly assists the root cause process. AIOPs became just a feature of modern Observability Platforms. Now there certainly is some debate as to what constitutes a viable Observability Platform. Please read What is Observability and How to Implement IT for one opinion. The short answer is that Observability should tell you everything that you need to know about every business transaction or process that you care about that is implemented in the software that you are observing.

The Rate of Change in Modern Systems Outstrips Most AI Approaches

Most AI approaches require some time to learn the “normal” state of the operations of applications and systems before they can be trusted to detect abnormal states. In their 2022 Global CIO Report, Dynatrace reports that 77% of CIO state that their systems change every minute or less. This makes it impossible for the AI to learn what was normal before something changed.

The Future of AIOPS

If AIOPS is not going to be what everything is going to become, then what is the future role for AIOps? The answer is that there is a class of problems which AI (and AIOPs) can and should address and there is a class problems which cannot be addressed by approaches that need to learn (or worse yet be manually trained) as to what is normal. A system or an application that is is updated multiple times a day and which changes the software that it is based upon multiple times a day (consider a CI/CD process that does thousands of code pushes a day, each of which might contain new topologies, new dependencies, and a new piece of software that the application relies upon) is not going to lend itself to an approach that that needs to learn “normal” first. But there are many systems and applications what do not fall into this category and AI based approaches certainly can help with these. Especially as the AI based approaches learn to detect the rate of change and learn which systems and applications they should target (so the humans do not have to decide). So whereas AI based approaches are not going to become the future state of IT Operations (AIOps), AI will become a very valuable tool that works under certain circumstances for certain classes of applications and systems. AI might in fact find a role to play in actionable root cause (what to actually do to solve a problem) and in the prediction of problems which have not yet occurred (so that they can be avoided).